I started this, hum, thing on a Friday evening, around 9 pm, half-watching something on Netflix I can’t remember. There was not really a plan; I just wanted a kind of auto-simulation with a galaxy, stars, and planets to click on for plenty of stats… and, of course, CIVILIZATIONS inside.

By 2 am, multiple versions of the code already existed, thanks to OpenAI Codex, but it was going somewhere I hadn’t expected at all. I went to sleep feeling a bit confused, somewhat satisfied, and very, very, very far from where I thought I would end up.

The weekend did the rest. Ten more hours, maybe more, I don’t remember. The project drifted further and further away from my initial ideas. At some point, tired enough to stop overthinking names, I picked something deliberately vague and grand: Transcendence. It sounded large enough to absorb whatever this thing was becoming. In retrospect, it fits disturbingly well.

Okay, so the original question was simple: “Can a large language model simulate a system on its own?” Not just the narrative part, but the full simulation. Not just making it look good: could it actually simulate dynamics, cause and effect, and decay? The answer came quickly on Saturday: no. Well, it works, but, come on, it’s so boring.

Left alone, the model smooths everything out! Conflict arises but then vanishes thanks to some magical power. Crises turn into growth opportunities, then nothing changes. Nothing ever truly collapses. It’s coherent, readable, consistent, and… yes, you get it: deeply uninteresting. Adding rules didn’t fix that. As long as the model held any form of “power”, it would slowly reinterpret, bend, or soften those rules until the system felt comfortable again.

That’s when it became clear that the issue wasn’t about having too much or too little complexity. It was the amount of power granted to the LLM.

The turning point was brutally simple: I had to remove the omniscience! Mhouahahaha.

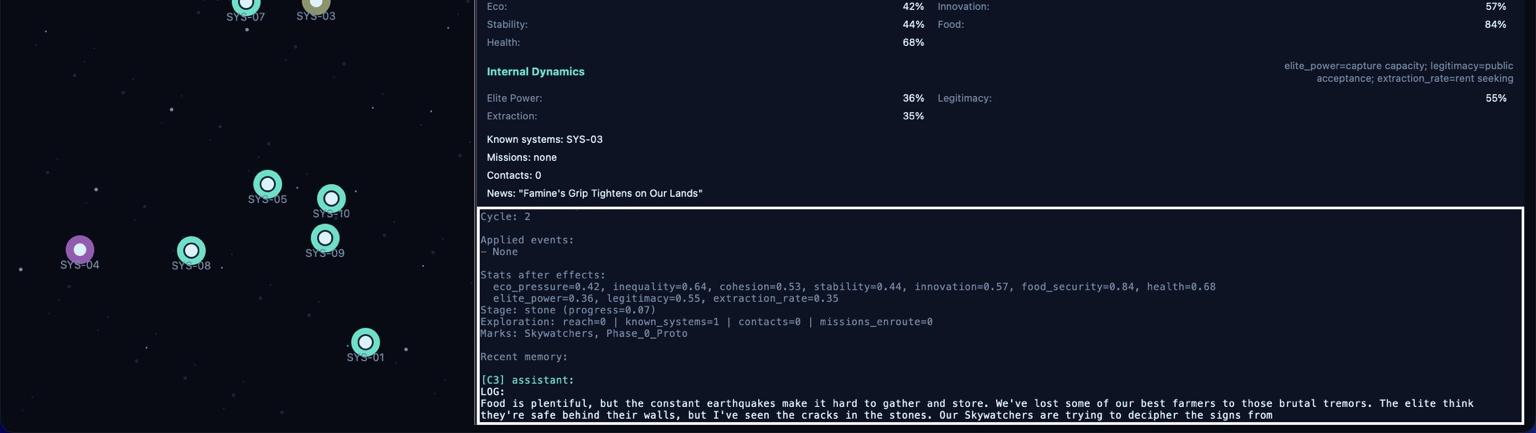

With that control removed, the rules decide everything, and the LLM is forbidden from doing anything except observing and talking. No massive decisions, no deep optimizations, no global view. Just local experience and interpretation based on a few pieces of information given at the start of each cycle.
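Here is a minimal sketch of that split, not the actual Transcendence code. The `Civ` fields, the harvest numbers, and the names `step_rules`, `local_view`, and `build_prompt` are all hypothetical, chosen only to illustrate the idea: the rules are the only place where state changes, and the narrator gets a small, coarse view with no raw numbers.

```python
import random
from dataclasses import dataclass


@dataclass
class Civ:
    # Hypothetical state; the real project tracks many more stats.
    name: str
    population: float
    food: float


def step_rules(civ: Civ, rng: random.Random) -> None:
    """The only function allowed to change state. No LLM involved."""
    harvest = rng.uniform(80.0, 120.0)      # made-up harvest range
    civ.food += harvest - civ.population    # each person eats one unit
    if civ.food < 0:
        civ.food = 0.0
        civ.population *= 0.9               # famine: lose 10%
    else:
        civ.population *= 1.02              # good year: grow 2%


def local_view(civ: Civ) -> dict:
    """What the narrator may see: coarse impressions, no exact figures."""
    return {
        "name": civ.name,
        "granaries": "empty" if civ.food <= 0 else "stocked",
    }


def build_prompt(view: dict) -> str:
    """Turn the local view into a prompt. The LLM can only talk."""
    return (
        f"You are the chronicler of {view['name']}. "
        f"The granaries are {view['granaries']}. "
        "Describe how your people feel this cycle. "
        "You cannot decide or change anything."
    )
```

Each cycle would then run `step_rules` first, build the prompt from `local_view`, and send it to the model. Nothing the model says is ever written back into the `Civ`.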

Once this strategy was in place, things started to get better!

The system stopped trying to make everything more efficient; of course, it was no longer allowed to. Then it began behaving differently. My small in-silico civilizations no longer depicted the world as it was but as they believed it to be, or rather, as they experienced it. Even when nothing significant occurred, no events, no collapses, no breakthroughs, the narrative LLM never went quiet. Hey, an LLM is designed to talk. Memory, fear, and scars filled in the gaps. The LLM wasn’t simulating reality; it was simulating what could be in the minds of the civilization (more or less successfully).

And that was, no doubt, far more fun!

On one side, the rules decide what happens; on the other, the LLM decides how it feels.

Agendas shift between survival and exploration not because a strategy changed, but because statistics crossed thresholds the Civs know nothing about. They proudly announce new phases right before being dragged back into scarcity. Optimism and decline coexist without irony, because no one involved has access to the full picture.
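The agenda flips can be sketched like this. The threshold value, the food-per-capita statistic, and the function names are assumptions made for illustration; the real rulesets may use different stats and cutoffs.

```python
# Hypothetical cutoff: below this food-per-capita value, a civ is in scarcity.
SCARCITY_THRESHOLD = 0.5


def agenda_for(food_per_capita: float) -> str:
    """Pure rule: the agenda depends only on which side of the threshold we are."""
    return "survival" if food_per_capita < SCARCITY_THRESHOLD else "exploration"


def announcements(history: list[float]) -> list[tuple[int, str]]:
    """Return (cycle, agenda) each time the agenda changes.

    This is all the civ ever 'hears': the new phase, never the number
    or the threshold that triggered it.
    """
    changes = []
    previous = None
    for cycle, value in enumerate(history):
        current = agenda_for(value)
        if current != previous:
            changes.append((cycle, current))
        previous = current
    return changes


print(announcements([0.8, 0.6, 0.4, 0.55, 0.3]))
# [(0, 'exploration'), (2, 'survival'), (3, 'exploration'), (4, 'survival')]
```

Note cycle 3: the civ announces a new era of exploration at 0.55, one cycle before falling back into survival. From the inside, that looks like a bold decision. From the outside, it is a number wobbling around a line.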

Of course, all of this was more or less embedded in the prompts from the start. I just didn’t fully realize what it implied until I saw it run. That’s probably the most honest outcome of the experiment. I can say that the system works at 100%, but I will keep my own definition of what it means for a system to work at 100% ;).

Quick tech note: the LLM runs locally through Ollama, and I tested it with a small Mistral model. It’s not meant to impress, but it’s pretty cool to have it offline on my MacBook Air. It’s there to make my civilizations interesting. You can even test the pseudo-simulation without an LLM, though it’s definitely less compelling (check out the Obituaries when all your poor civilizations have died, with the LLM summarizing their lives).
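For the curious, here is roughly what a local narration call with an LLM-free fallback could look like. It is a sketch, not the code from the repo: it assumes Ollama's default local endpoint (`http://localhost:11434/api/generate`), and the `narrate` and `fallback_line` helpers and the shape of `view` are hypothetical.

```python
import json
import urllib.error
import urllib.request

# Default address of a local Ollama server (assumed to be unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def fallback_line(view: dict) -> str:
    """LLM-free mode: a flat, template-based line."""
    return f"{view['name']} enters a phase of {view['agenda']}."


def narrate(view: dict, model: str = "mistral:7b",
            url: str = OLLAMA_URL, timeout: float = 30.0) -> str:
    """Ask the local model for a short chronicle; fall back if it is unreachable."""
    prompt = (
        f"You are the chronicler of {view['name']}. "
        f"Your people are focused on {view['agenda']}. "
        "Write two sentences about how this cycle felt."
    )
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = json.loads(response.read().decode("utf-8"))
            return body["response"].strip()
    except (urllib.error.URLError, OSError, ValueError, KeyError):
        # Server down, model missing, or unexpected reply: stay in LLM-free mode.
        return fallback_line(view)
```

Calling `narrate({"name": "Aurelia", "agenda": "survival"})` with Ollama running returns the model's text. With Ollama stopped, it returns the template line, so the simulation keeps running either way.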

This experiment, completed in just a few hours with AI support, is the kind of use that really matters to me. It doesn’t replace my analysis, concepts, guidance, or creativity, nor does it give me answers, but it shortens the gap between my hypothesis and my first meaningful failure.

I’m not selling anything. I’m not creating a product. The code is publicly available, the logs are accessible, and the tiny in-silico universes you create will still end, sooner or later, according to the rulesets you define.

Full code (Python) on GitHub: https://github.com/nkiu/Transcendence

You will need a computer (Windows or macOS) with a decent amount of RAM (16 GB or more is better), Ollama, and the LLM you want, unless you want to stick to the LLM-free mode. I have tried it with mistral:7b and llama3:8b.